“I warned you guys in 1984 and you didn’t listen.”

These are the words of James Cameron, who was asked to weigh in on matters of AI in a recent CTV News interview. Cameron, of course, is a logical voice to seek out on the topic; after all, he’s the director behind Terminator and Terminator 2: Judgment Day.

The latter, an irrefutable classic by every measure, opens with the particularly chilling revelation that our planet has been rendered unrecognizable by a war against a self-aware artificial intelligence system called Skynet.

Leading the human resistance against the machines in the Terminator franchise is John Connor, a childhood version of whom is at the center of T2's time-traveling rescue mission. At the core of Skynet's actions is its view of humanity as a threat, resulting in a full-scale assault on humankind.

In recent months, we've been met with a number of forward-looking warnings about the seemingly rapid development of generative AI and related tech, ranging from calls for regulation to be put in place as soon as possible to predictions of outright human extinction. Many of these warnings, in fact, conjure images not all that dissimilar to the leveled landscape of those harrowing T2 sequences.

Below, we take a look at the still-unfolding wave of AI fears, covering everything from AI's role in the recent writers' and actors' strikes to what some experts argue is our possible (some even say likely) future.

What do we mean when we say "AI"?

You may have noticed the journey the term has taken in colloquial use, particularly over the past year. In the most general sense, of course, AI is simply an abbreviation for artificial (as in not human) intelligence. More pedestrian, less threatening examples of AI or AI-adjacent technology in this broader sense include spell check, Siri, and similar everyday programs.

But when people talk about the dangers of AI, they are more often than not referring to generative AI (ChatGPT, for example) and other more advanced examples of such tech. Predictably, the present-day use of the term has caused confusion, including a recent example of widespread misreporting about a Beatles project.

Who's been warning us?

At this point, it would be easy (though not wise) to gloss over AI-related warnings given how prevalent they've become. James Cameron, as mentioned above, was recently adamant about how he sees such risks potentially playing out.

“I mean, you’ve got to follow the money of who’s building these things, right? They’re either building it to dominate market shares—so what are you teaching it? Greed—or you’re building it for defensive purposes so you’re teaching it paranoia,” he told Vassy Kapelos of CTV News.

Terminator star Arnold Schwarzenegger has also connected the franchise with the current discussion surrounding AI, saying earlier this year that "it's not any more fantasy or kind of futuristic" but is instead "here today."

But Terminator alumni are far from the only prominent voices to have spoken out on the topic. Sam Altman, the CEO of ChatGPT developer OpenAI, said he was "nervous" about the risks during a Senate Judiciary Committee hearing in May.

"I think if this technology goes wrong, it can go quite wrong," Altman said at the time. "And we want to be vocal about that. We want to work with the government to prevent that from happening. But we try to be very clear-eyed about what the downside case is and the work that we have to do to mitigate that.”

Meanwhile, Dr. Geoffrey Hinton, a former Google researcher widely referred to as the "godfather of AI," went public with a similarly cautionary series of remarks about what could lie ahead.

What's the worst that could happen?

Earlier this year, the Center for AI Safety shared a statement—signed by a group of experts and public figures—pointing to "extinction" concerns.

"Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war," reads the statement, whose signatories included Bill Gates and Ramy Youssef.

But sometimes it takes an accomplished storyteller to paint a more vivid picture, even when the story being told details quite tangible concerns. In the same CTV interview, James Cameron imagined a worst-case scenario involving a mutually destructive exchange of nuclear firepower.

First urging those who share his worries to "follow the money," the filmmaker said such systems are being built either to "dominate" markets or for military use.

"I think that we will get into the equivalent of a nuclear arms race with AI," Cameron predicted. "And if we don't build it, then the other guys are for sure gonna build it. ... You can imagine an AI in a combat theater, the whole thing just being fought by the computers at a speed that humans can no longer intercede. You have no ability to de-escalate and when you're dealing with the potential of it escalating to nuclear warfare, de-escalation is the name of the game. And having that pause, that timeout. But will they do that? The AIs will not."

Other, perhaps more imminent, threats include the continued spread of fake news stories and similar false claims through AI-generated articles (placing us all in a truly post-truth existence), the chance that governments will move to regulate AI without adequately understanding its ins and outs (a typical government move, to be sure), and more.

Terminator isn't the only film being discussed amid such talk. Emad Mostaque, CEO of Stability AI, likened what he sees as a possible future to the Joaquin Phoenix-starring Spike Jonze film Her. Mostaque said his “personal belief” is that the future will mirror elements of that acclaimed story.

“Humans are a bit boring, and it’ll be like, ‘Goodbye’ and ‘You’re kind of boring,’” he told BBC News in May.

Central to every concern raised so far by those skeptical that AI can be developed ethically is the question of control. Dr. Roman V. Yampolskiy, an AI expert who recently penned an op-ed on the topic for The Hill, called the issue a "high-risk, negative-reward situation."

Amid the slew of People Are Worried About AI pieces, some experts have taken a different view, arguing that any "worst-case scenarios" are unlikely to actually come to pass.

But can AI be used for good?

In theory, sure. The better question is, can humans be trusted to use anything purely for good? As history has shown us time and time again, sometimes more glaringly than others, that’s a big no.

As humans, we are seemingly hardwired to pursue our own destruction. We may stop at various points along this journey, perhaps to offer up some ultimately self-serving warnings or to dabble in performative woe, but we always stay on the path.

This is especially true under capitalism.

What about jobs?

Fully unpacking this aspect of AI would require an article of its own, but a few key issues are readily apparent. Chief among them, of course, is a potentially substantial loss of jobs. Per a recent CBS News report citing data compiled by Challenger, Gray & Christmas, nearly 4,000 jobs were lost in May 2023 in connection with the implementation of AI.

It's unclear, exactly, how this will continue to take shape in the months and years ahead. What is clear here in the States, however, is that our current refusal to overhaul how we view basic income, the availability of everyday necessities, and our entire approach to work/life balance is not sustainable.

How has AI affected art and entertainment?

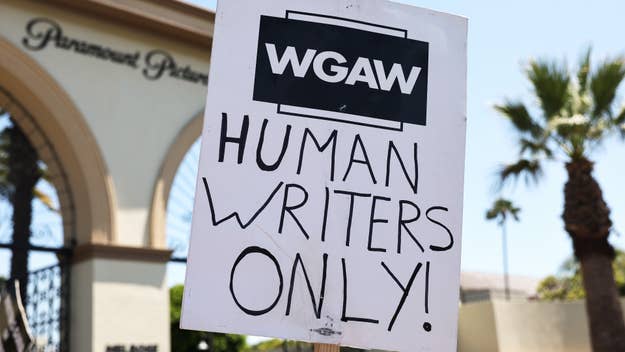

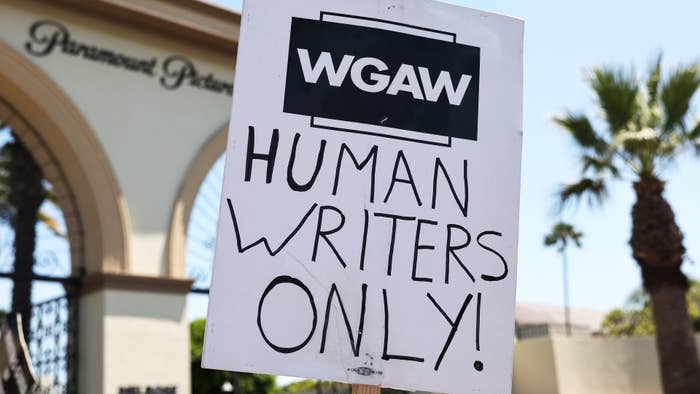

Per SAG-AFTRA president Fran Drescher, AI "poses an existential threat to creative professions, and all actors and performers deserve contract language that protects them from having their identity and talent exploited without consent and pay."

Actors went on strike in July, joining WGA members in demanding a fair and equitable deal from greed-driven studios. While AI isn't the only important issue at the center of stalled negotiations with the unions, it's a key one for both.

...and Allen Iverson plays into all of this how, exactly?

He doesn't, of course, but that hasn't stopped the jokes (the NBA legend is often referred to by his initials, prompting a slew of tongue-in-cheek remarks from fans as AI headlines have increased). Like the bulk of human behavior, this trend can be chalked up to sheer existential dread and our inherent need for constant coping, a need that's certain to persist until humanity sings its last song.